Artificial Intelligence (AI) has made tremendous strides over the past few years, thanks largely to the complex algorithms and vast amounts of data that fuel systems like ChatGPT. However, as these models advance, so too does the need to address their inherent limitations, particularly in how they move and process data. Researchers from Peking University, led by Professor Sun Zhong, have taken a step in this direction by investigating solutions to what is known as the von Neumann bottleneck. Their work, detailed in a September 2024 paper published in the journal *Device*, introduces a novel dual-IMC (in-memory computing) scheme designed to improve both speed and energy efficiency in machine learning workloads.

At the core of the von Neumann bottleneck is the mismatch between the speed of data processing and the speed of data transfer. Traditional computing architectures separate memory storage from processing units. As a result, when large datasets are involved, as is frequently the case in AI applications, moving data between these two components can create significant delays. Matrix-vector multiplication (MVM), a crucial operation for neural networks, exemplifies this challenge because it relies on frequent shuttling of data between memory and processing units. This transfer not only consumes energy but also slows computation.
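To make the cost of that shuttling concrete, the following is a minimal sketch in plain Python that counts the arithmetic and the memory traffic for a single MVM. It is not taken from the paper: the helper names `mvm` and `mvm_traffic`, the 4-byte value size, and the assumption that every weight, input, and output crosses the memory/processor boundary exactly once are all illustrative.

```python
def mvm(weights, x):
    """Matrix-vector product y = W x using plain Python lists."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def mvm_traffic(rows, cols, bytes_per_value=4):
    """Count arithmetic work and memory traffic for one y = W x.

    Idealized assumption: each weight, input and output value is moved
    between memory and the processor exactly once (no cache reuse).
    """
    flops = 2 * rows * cols  # one multiply and one add per weight
    bytes_moved = bytes_per_value * (rows * cols + cols + rows)
    return flops, bytes_moved, flops / bytes_moved


# Small worked example: a 3x2 weight matrix times a length-2 input.
W = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
x = [10.0, 1.0]
print(mvm(W, x))  # [12.0, 34.0, 56.0]

# Traffic for a hypothetical 4096 x 4096 layer.
flops, moved, intensity = mvm_traffic(4096, 4096)
print(flops, moved, intensity)
```

Under these assumptions each weight is fetched once and used for only two operations, so the ratio of arithmetic to bytes moved stays near 0.5 however large the matrix grows. That low ratio is why MVM tends to be limited by data transfer rather than by computation.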

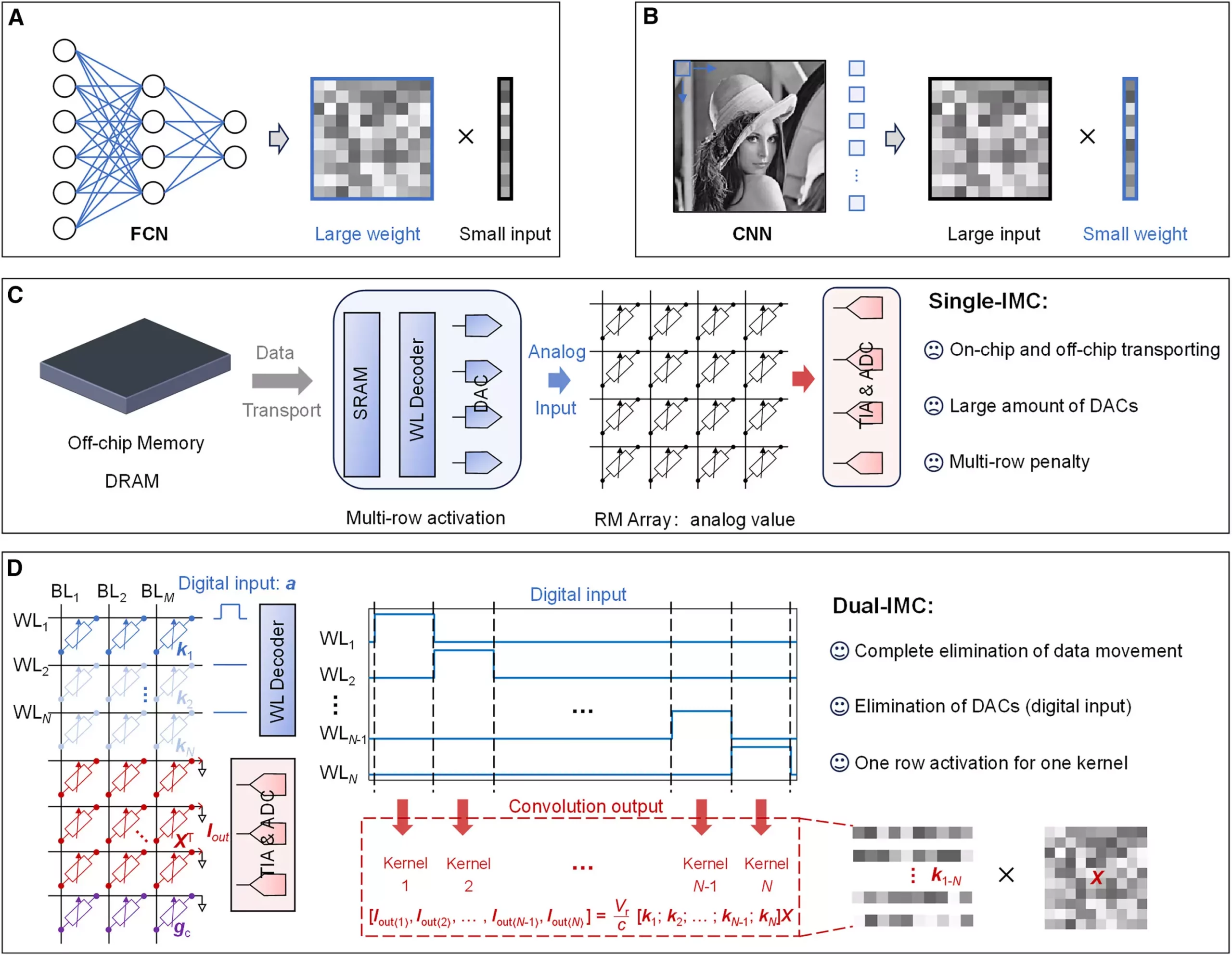

To combat this issue, the research team has pivoted towards a more integrated approach by developing the dual-IMC system. Unlike the conventional single-IMC method, which stores only neural network weights in memory while input data comes from external sources, the dual-IMC scheme keeps both the weights and the inputs within the memory array. With this fully in-memory paradigm, the dual-IMC approach avoids the input-transfer overhead that single-IMC systems still incur.

The dual-IMC scheme presents several advantages that could reshape the landscape of artificial intelligence and computing. First and foremost, by performing calculations entirely within the memory unit, this new method drastically reduces the time and energy typically spent accessing off-chip dynamic random-access memory (DRAM) and on-chip static random-access memory (SRAM). Given that a significant portion of energy consumption in current systems is devoted to data movement, the implications of this efficiency are substantial.

Moreover, testing of the dual-IMC scheme on resistive random-access memory (RRAM) devices has shown promise not just in signal recovery but also in image processing tasks. These operations benefit greatly from the high speed and low energy consumption that dual-IMC can provide. As the demand for rapid, large-scale data processing continues to escalate in our data-driven world, technologies like dual-IMC could lay the groundwork for more powerful AI systems and applications.

One of the remarkable aspects of the dual-IMC model is its cost-effectiveness. The elimination of digital-to-analog converters (DACs), which are necessary in single-IMC configurations, simplifies circuit design and reduces production costs. The reduced need for supplementary components not only saves on material costs but also decreases the overall footprint of the chips, resulting in lower power requirements and latency. These benefits have important ramifications for industries that rely on fast and efficient computational capabilities, such as telecommunications, cybersecurity, and automated systems.
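To illustrate where DACs sit in a single-IMC design, here is a toy numerical model in plain Python. It is a generic analog-array idealization, not the authors' circuit: the helper names `dac` and `single_imc_mvm`, the bit widths, the one-DAC-per-input-row layout, and all values are hypothetical. Weights are modeled as cell conductances, each digital input is converted to a voltage by a DAC, and each output is the summed current on a column (Ohm's law per cell, Kirchhoff's current law per column).

```python
def dac(value, bits, v_max=1.0):
    """Quantize a digital input in [0, 1] to one of 2**bits voltage levels."""
    levels = 2 ** bits - 1
    code = round(min(max(value, 0.0), 1.0) * levels)
    return v_max * code / levels


def single_imc_mvm(conductances, inputs, dac_bits):
    """Toy single-IMC MVM: weights live in the array, inputs arrive via DACs.

    conductances[i][j] is the cell joining input row i to output column j.
    Each column current is the sum of G * V over the rows of that column.
    """
    voltages = [dac(x, dac_bits) for x in inputs]  # one DAC per input row (assumed)
    n_cols = len(conductances[0])
    return [
        sum(conductances[i][j] * voltages[i] for i in range(len(voltages)))
        for j in range(n_cols)
    ]


G = [[1.0, 0.5], [0.25, 2.0]]  # hypothetical conductances, arbitrary units
x = [0.3, 0.8]                 # hypothetical normalized inputs

print(single_imc_mvm(G, x, dac_bits=2))  # coarse input conversion
print(single_imc_mvm(G, x, dac_bits=8))  # finer input conversion
print(len(x), "DACs in this toy array")
```

In this model the number of DACs grows with the number of input rows, and their resolution limits how faithfully the inputs are represented. A scheme that keeps the inputs inside the memory array, as the article describes for dual-IMC, would have no need for this conversion stage.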

As we look towards the future, it is clear that the innovations stemming from research on dual-IMC could herald revolutionary advances in both computing architecture and artificial intelligence. The implications stretch beyond mere efficiency; they invite an era where limitations imposed by existing infrastructure can be overcome, allowing for more sophisticated, timely, and energy-efficient AI applications to flourish.

The dual-IMC development spearheaded by Professor Sun Zhong and his team exemplifies a significant advancement in the way we approach data processing within AI systems. By addressing the pervasive von Neumann bottleneck and creating a more efficient architecture, this research not only promises enhanced performance but also paves the way for groundbreaking innovations in computational technology. As the appetite for data grows, the foundational changes delivered by dual-IMC technology could ultimately shape the future of AI, making it faster, smarter, and more energy-efficient.