Shoppers choosing fresh produce often wish for a tool that could help them judge quality at a glance. Research from the Arkansas Agricultural Experiment Station shows how machine-learning models can be trained on human sensory evaluations to make food quality assessments more reliable. The study, led by Dongyi Wang, aims to bridge the gap between human perception and machine assessment. That work could eventually support smarter grocery shopping and better food presentation in stores.

The Challenge of Food Quality Assessment

People are generally good at judging food quality from visual cues, but their judgments can shift with conditions such as the lighting under which the food is viewed. Traditional machine-learning models are useful, but they lack this nuanced human sense of quality. They often fail to adapt to different environments, which leads to inconsistent evaluations of food quality. The Arkansas study addresses these shortcomings by examining how human evaluations can improve the performance of machine-learning models.

Unpacking the Study

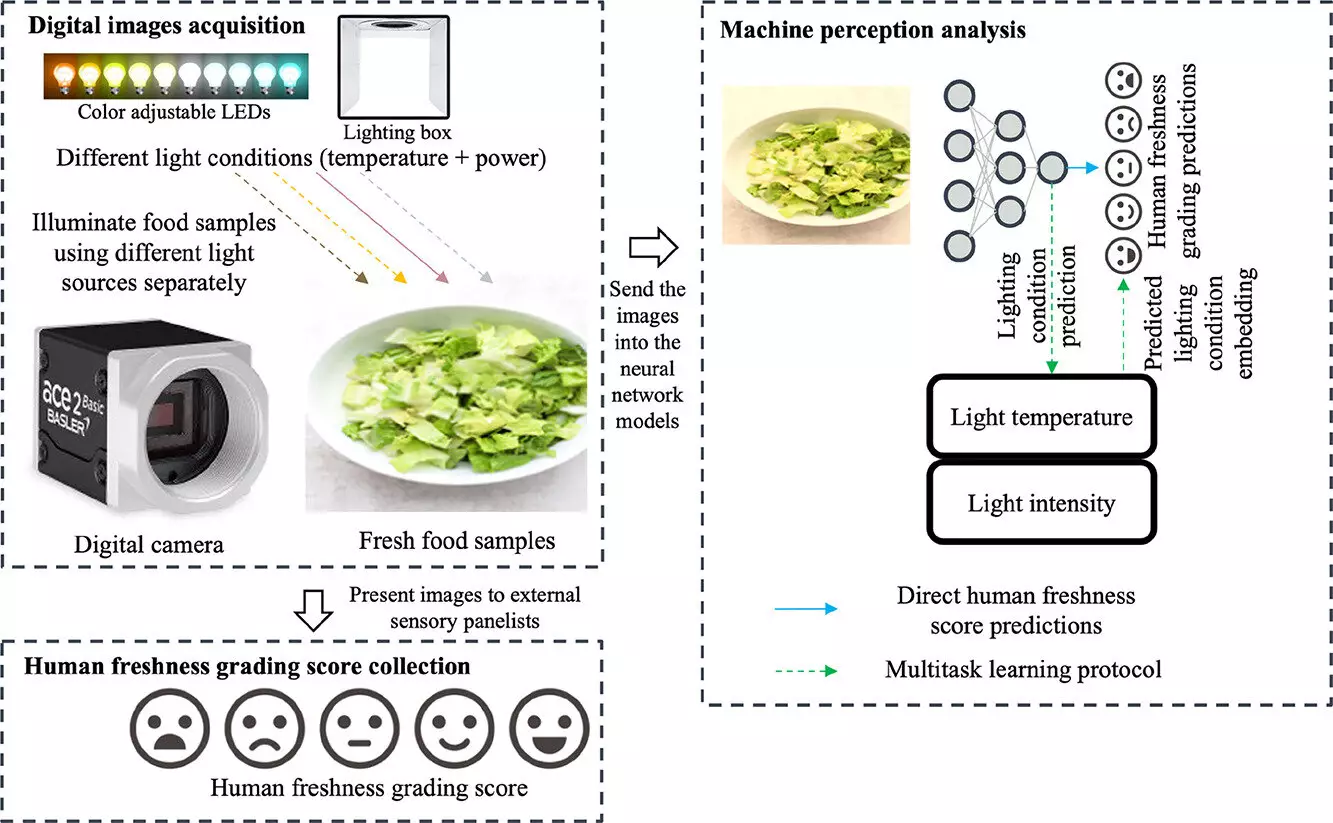

The study, published in the Journal of Food Engineering, integrates human sensory evaluation into machine learning. Wang and his team focused on Romaine lettuce and photographed it under varying lighting conditions. In total, they compiled 675 images taken over eight days, showing different levels of freshness and browning. A sensory panel of 109 people with normal vision graded the freshness of the lettuce in these images on a scale from 0 to 100.
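The article does not say how the panelists' individual scores were turned into training labels. A common approach, assumed here purely for illustration, is to average each image's 0 to 100 ratings and keep their spread as a measure of how much panelists disagreed. The `aggregate_ratings` helper and the `(image_id, score)` record layout below are hypothetical, not the study's method.

```python
from collections import defaultdict
from statistics import mean, pstdev


def aggregate_ratings(ratings):
    """Collapse individual panel ratings into one label per image.

    Hypothetical helper: the study's actual aggregation is not described.
    `ratings` is an iterable of (image_id, score) pairs, each score on the
    0-100 freshness scale. Returns {image_id: (mean, std, n_ratings)}.
    """
    by_image = defaultdict(list)
    for image_id, score in ratings:
        if not 0 <= score <= 100:
            raise ValueError(f"score {score} for {image_id} is outside 0-100")
        by_image[image_id].append(score)
    # The mean serves as the consensus label; the population standard
    # deviation records how much the panelists disagreed on that image.
    return {
        image_id: (mean(scores), pstdev(scores), len(scores))
        for image_id, scores in by_image.items()
    }


if __name__ == "__main__":
    # Made-up ratings for two images, for illustration only.
    demo = [("img_001", 80), ("img_001", 70), ("img_001", 90),
            ("img_002", 40), ("img_002", 60)]
    for image_id, (avg, spread, n) in aggregate_ratings(demo).items():
        print(image_id, avg, round(spread, 2), n)
```

Keeping the spread alongside the mean matters because a label that 109 people agree on is more trustworthy than one where their scores are widely scattered.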

The central result is that machine predictions can be brought closer to human perception. Wang's findings suggest that integrating human sensory evaluations can reduce prediction errors by nearly 20% compared with conventional models that ignore the variability inherent in human assessments. The study's methodology shows that machine-learning models can become more reliable when they are trained with human insight.
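The article does not name the error metric behind the "nearly 20%" figure. As an illustration only, assuming the metric is root-mean-square error (RMSE) against panel-derived freshness labels, a relative reduction is the baseline error minus the improved error, divided by the baseline error. The function names and numbers below are hypothetical and are not results from the study.

```python
import math


def rmse(predicted, actual):
    """Root-mean-square error between model predictions and panel labels."""
    if len(predicted) != len(actual) or not predicted:
        raise ValueError("inputs must be non-empty and the same length")
    squared = sum((p - a) ** 2 for p, a in zip(predicted, actual))
    return math.sqrt(squared / len(actual))


def relative_reduction(baseline_error, new_error):
    """Fraction by which new_error improves on baseline_error (0.2 means 20%)."""
    if baseline_error <= 0:
        raise ValueError("baseline error must be positive")
    return (baseline_error - new_error) / baseline_error


if __name__ == "__main__":
    # Made-up freshness labels and predictions; not the study's data.
    labels = [80.0, 50.0, 20.0]
    baseline = rmse([90.0, 60.0, 30.0], labels)  # each prediction off by 10
    improved = rmse([88.0, 58.0, 28.0], labels)  # each prediction off by 8
    print(f"{relative_reduction(baseline, improved):.0%}")
```

A relative reduction says nothing about absolute accuracy on its own: a 20% cut from a large baseline error can still leave a sizeable error, so both numbers are worth reporting.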

One of the study's key contributions is its attention to how illumination affects human perception of food quality. Earlier studies generally relied on "human-labeled ground truths" without accounting for how lighting can distort those perceptions. Wang's research shows that the color temperature and brightness of lighting can significantly change how fresh produce appears. For example, warmer lighting may mask browning in lettuce, leading consumers to overestimate its freshness. This has implications for both retailers and food processors, since lighting choices shape how consumers perceive product quality.

Revolutionizing Retail Strategies

As grocery stores work to optimize their displays, the study's findings have practical applications. With a better understanding of how lighting affects perception, retailers can design displays and lighting that present fresh produce well. Food processors, in turn, can adjust their machine vision systems to account for these findings and improve quality control. Ultimately, predicting food quality by combining human input with machine learning could change how food products are evaluated and help consumers make well-informed choices.

Although the Arkansas study focused on food quality assessment, its implications extend beyond fresh produce. Wang suggests that the approach could be applied in other fields, including jewelry evaluation. As machine vision technologies evolve, training these systems on human perceptual data could make them more reliable in any industry where appearance plays a critical role.

The Arkansas Agricultural Experiment Station study shows that integrating human perceptual data into machine-learning models can make food quality assessments more consistent and accurate. Dongyi Wang and his team have started a promising line of work on combining human sensory experience with technology. The research points toward better grocery shopping experiences and has broader implications for other industries. Going forward, combining the strengths of human insight and machine learning could be key to improving quality evaluations in many fields.