The rapid rise of artificial intelligence in recent years has brought both excitement and concern about its implications for society. With the European Union’s AI Act entering into force on August 1, 2024, the legal landscape for AI applications is changing significantly. The legislation sets boundaries for the development and use of AI, with a particular focus on protecting people in sensitive areas. Insights from experts such as Professor Holger Hermanns of Saarland University and Professor Anne Lauber-Rönsberg of Dresden University of Technology are making it clearer how the law affects programmers.

The AI Act is a comprehensive piece of legislation: 144 pages of regulations governing the development and deployment of AI technologies across the European Union. Its primary objective is a structured framework that keeps AI systems safe, ethical, and largely free from bias, particularly in applications that can significantly affect individuals’ lives, such as hiring processes or medical assessments. The pivotal question for software developers is, “What does this mean for my day-to-day work?”

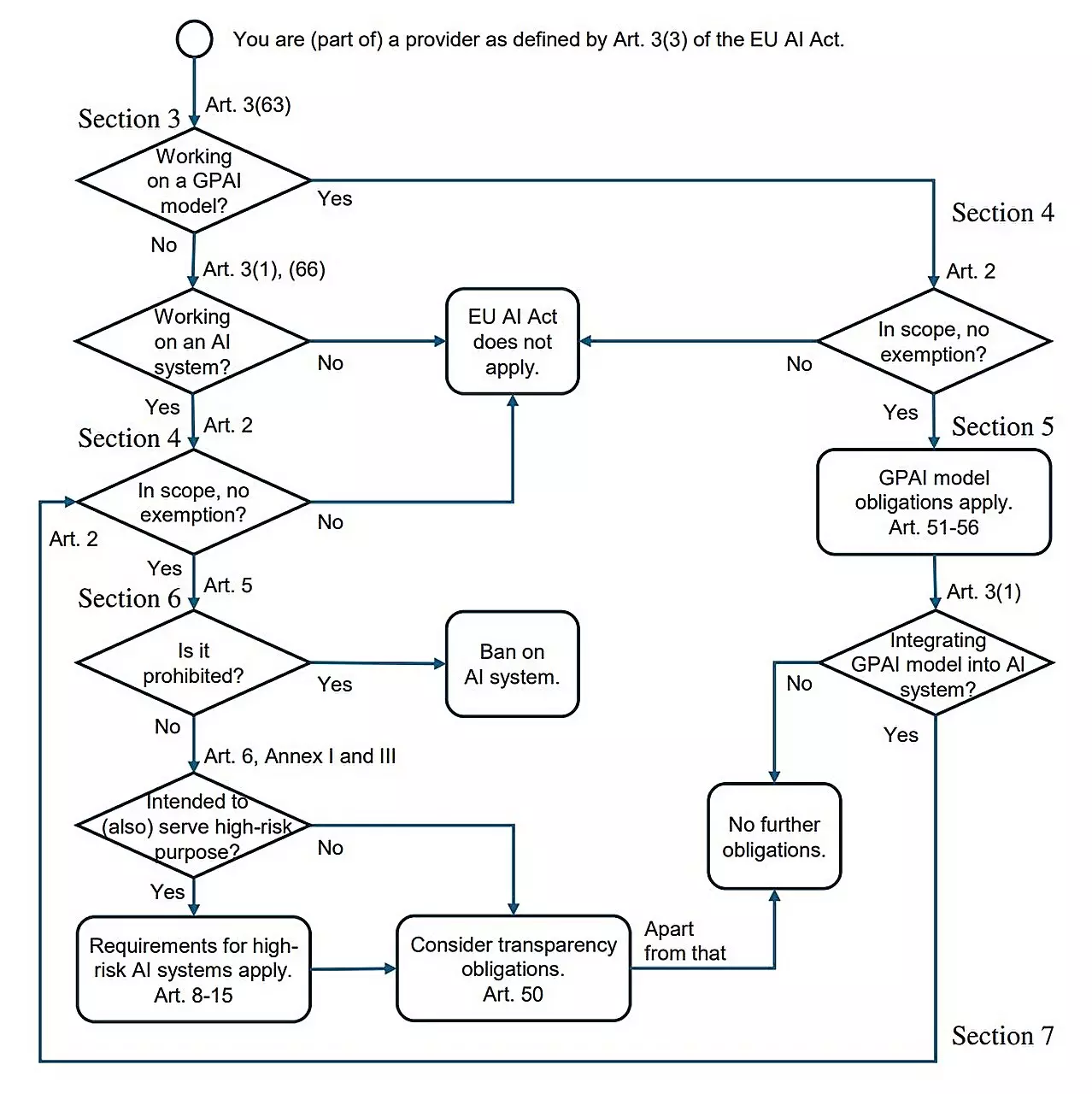

Few programmers have the time or inclination to comb through the entire legal text, which leaves many uncertain about where they stand. Hermanns explains that the researchers’ paper, “AI Act for the Working Programmer,” seeks to bridge this gap by setting out what developers need to know to navigate the new landscape.

A crucial element of the AI Act is the distinction it draws between high-risk and low-risk AI applications. According to Sarah Sterz, one of the researchers involved in the study, most software developers will probably not feel an immediate impact from the legislation. The Act concentrates on systems categorized as high-risk, such as those used in employment, credit rating, and education, which face stricter regulatory scrutiny.

For example, developers building an AI tool that analyzes job applications must ensure fairness and guard against discrimination caused by biased data sets. In contrast, AI for non-sensitive tasks, such as simulating characters in video games, can be developed with far less concern about compliance with the AI Act. This split lets a considerable portion of the programming community keep innovating without heavy legal burdens, while requiring those who work on high-stakes applications to put robust oversight procedures in place.
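What a fairness check on a job-application tool might look like depends heavily on the system. As a minimal sketch, the Python snippet below compares how often candidates from different groups are selected. The function names, the group labels, and the choice of a selection-rate ratio are assumptions made for illustration; the article does not say which metric, if any, the AI Act expects.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Map each group to the fraction of its candidates that were selected.

    `decisions` is an iterable of (group, selected) pairs, where `selected`
    is True or False. The group labels are hypothetical.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def rate_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means equal rates)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group
    return min(rates.values()) / highest


# Illustrative data only, not real hiring outcomes.
sample = [
    ("group_a", True), ("group_a", True), ("group_a", False), ("group_a", False),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]
rates = selection_rates(sample)
print(rates, rate_ratio(rates))
```

A low ratio is only a signal that the training data or the model deserves a closer look; it is not by itself evidence of discrimination, and where to draw the line is a judgment the sketch leaves open.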

For developers engaged in building high-risk AI systems, compliance with the AI Act entails several rigorous requirements. These include ensuring that the training data is relevant and unbiased, maintaining comprehensive logs of system operations, and documenting how the AI functions in a manner that is accessible to users. Hermanns elaborates that effective monitoring is paramount; like flight data recorders in aircraft, logs should provide insight into the AI’s decision-making process in order to facilitate error correction and accountability.
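The flight-recorder comparison can be illustrated with a small append-only decision log. This is a sketch under stated assumptions: the record fields, the JSON Lines file format, and the `log_decision` helper are hypothetical choices, not something the AI Act or the researchers prescribe.

```python
import json
from datetime import datetime, timezone


def log_decision(path, model_version, inputs, output):
    """Append one decision record to a JSON Lines file and return it.

    The fields here (timestamp, model version, inputs, output) are an
    assumed minimum for reconstructing a decision after the fact.
    """
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "inputs": inputs,
        "output": output,
    }
    # Open in append mode so earlier records are never overwritten.
    with open(path, "a", encoding="utf-8") as log_file:
        log_file.write(json.dumps(record, sort_keys=True) + "\n")
    return record


def read_decisions(path):
    """Load every logged record, oldest first."""
    with open(path, encoding="utf-8") as log_file:
        return [json.loads(line) for line in log_file if line.strip()]
```

Writing one self-contained record per line keeps the log easy to append to and easy to inspect later. Which inputs may be stored, and for how long, is a legal and data-protection question that this sketch does not answer.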

The documentation must also be detailed enough for users and regulators to understand how the AI operates. While the discussion about what counts as sufficient human oversight continues, explicit documentation lays a foundation for the trust and transparency that AI technologies depend on.

While the AI Act introduces constraints, its impact on the broader software industry may be less severe than anticipated. According to Hermanns, uses of AI that are already illegal, such as applying facial recognition algorithms to interpret emotions, remain prohibited, which suggests a degree of continuity in the working environment for programmers. AI systems that do not touch sensitive applications, such as those used for entertainment or nuisance prevention, will likely experience minimal disruption.

Moreover, the AI Act does not impose restrictions on the research and development phase, which allows innovation to continue within the EU. Programmers can therefore keep experimenting freely, and the legislation strikes a balance between safety and innovation.

As the digital landscape continues to evolve, the European Union’s AI Act stands as a pioneering legislative framework that seeks to align advances in AI technology with ethical guidelines and user safety. The researchers’ analysis indicates that while high-risk AI systems face significant constraints, most programming activities remain largely unaffected. The regulation thus aims to promote technological progress while mitigating potential risks, an essential step for the responsible development of AI in Europe and beyond.