Researchers from the University of Chicago’s Department of Computer Science, working with collaborators at the Pritzker School of Molecular Engineering and Argonne National Laboratory, have developed a classical algorithm that can simulate Gaussian boson sampling (GBS) experiments. The work helps explain how current quantum devices actually behave and clarifies where classical and quantum computing methods complement each other.

Gaussian boson sampling has quickly attracted interest as a promising route to demonstrating quantum advantage: the point at which a quantum device performs a task that would take classical computers an impractically long time. Despite early claims of quantum supremacy, real-world experiments suffer from noise and photon loss, which complicate the results and raise questions about how reliable those claims are.

Experiments in this area have pushed quantum hardware to its limits, and with that push have come practical difficulties. Assistant Professor Bill Fefferman described the complications that noise and photon loss introduce in real experiments and stressed the need to analyze these effects carefully. Earlier studies showed that although quantum devices produce outputs broadly consistent with GBS predictions, environmental noise often degrades those results, casting doubt on some earlier claims of quantum advantage.

Fefferman noted that the interplay between noise and quantum performance needs careful study because it bears directly on the practical usefulness of quantum computers. “While theoretical models show a distinct advantage for quantum systems, real-world experiments inherently complicate these claims due to noise interference,” he said. That observation gives researchers a starting point for refining current quantum experiments to make them more useful.

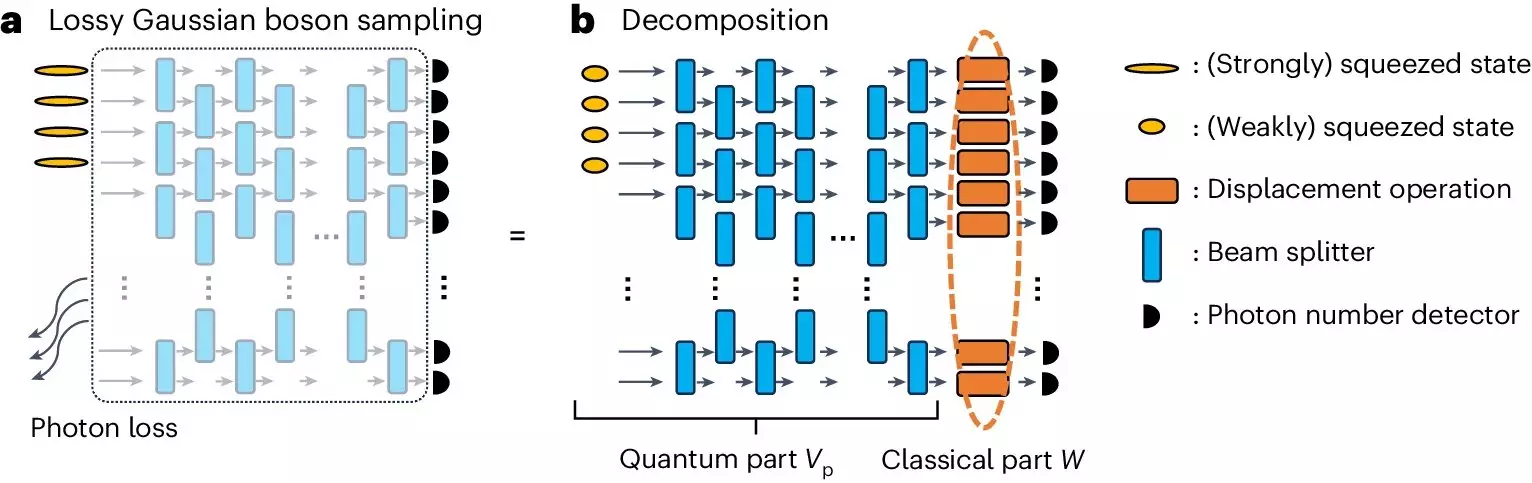

The team’s classical algorithm is designed to handle the high photon-loss rates typical of GBS experiments. It uses a classical tensor-network method tailored to how quantum states behave under noise, which makes the simulation both more efficient and more accurate. On several benchmarks, the algorithm outperformed state-of-the-art GBS experiments, a result that sharpens the debate over how much advantage current quantum devices really offer.
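To give some intuition for why heavy photon loss can help a classical simulator, the sketch below works through a textbook single-mode calculation. It is an illustration under stated assumptions, not the team’s algorithm: it assumes a squeezed vacuum with squeezing parameter `r` passed through a pure-loss channel with transmission `eta`, with quadrature variances in units where the vacuum variance is 1/2. Loss mixes the squeezed state with vacuum, so the output can be split into a less-squeezed pure state plus classical Gaussian noise (random displacements). The function names are hypothetical.

```python
import math


def lossy_squeezed_variances(r: float, eta: float) -> tuple[float, float]:
    """Quadrature variances (x, p) of a squeezed vacuum with squeezing r after
    a pure-loss channel with transmission eta (vacuum variance = 1/2)."""
    vx = eta * math.exp(-2 * r) / 2 + (1 - eta) / 2
    vp = eta * math.exp(2 * r) / 2 + (1 - eta) / 2
    return vx, vp


def split_pure_and_noise(r: float, eta: float) -> tuple[float, float]:
    """Split the lossy state into a pure squeezed state plus classical noise.

    Keeps the squeezed (x) variance, gives the pure part the minimum
    p-variance allowed by the uncertainty relation vx * vp = 1/4, and assigns
    the leftover p-variance to classical Gaussian noise.
    Returns (s, noise_variance), where s is the pure part's squeezing.
    """
    vx, vp = lossy_squeezed_variances(r, eta)
    s = -0.5 * math.log(2 * vx)
    noise_variance = vp - 1 / (4 * vx)
    return s, noise_variance


def mean_photons(vx: float, vp: float) -> float:
    """Mean photon number of a zero-mean single-mode Gaussian state."""
    return (vx + vp - 1) / 2


if __name__ == "__main__":
    r = 1.0  # hypothetical input squeezing
    for eta in (1.0, 0.7, 0.3, 0.1):
        s, noise = split_pure_and_noise(r, eta)
        total = mean_photons(*lossy_squeezed_variances(r, eta))
        pure = math.sinh(s) ** 2  # photons carried by the pure (quantum) part
        print(f"eta={eta:.1f}  total photons={total:.3f}  pure part={pure:.3f}")
```

Under these assumptions, as `eta` drops, the pure part carries a shrinking share of the photons and the rest is classical noise that is cheap to sample. That is one way to see why highly lossy GBS experiments may leave less genuinely quantum structure for a classical method to reproduce.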

Fefferman emphasized that this finding should not be read as a failure of quantum computing but as an opportunity to reassess its capabilities. “What we witness is a fruitful exchange between classical and quantum approaches that encourages further algorithmic advancement and a deeper understanding of computational limits,” he said.

Because the new algorithm closely reproduces the ideal distribution of GBS output states, it raises pointed questions about whether existing claims of quantum advantage in current experiments hold up. The results also point toward better experimental design: improving photon transmission and increasing the number of squeezed states could substantially strengthen future experiments.

Beyond the GBS debate, progress in quantum computing more broadly could affect fields such as cryptography, materials science, and drug discovery. For instance, advances in quantum techniques could lead to new approaches to secure communication and data protection. In materials science, better quantum simulations could help identify new materials with unusual properties, supporting innovation in energy storage and manufacturing.

Combining quantum and classical computing is central to advancing both. The collaboration behind this research shows the value of drawing on the strengths of each to tackle modern computational problems, and similar hybrid approaches could support work on supply-chain optimization, artificial intelligence algorithms, and climate modeling.

Fefferman, together with colleagues including Professor Liang Jiang and former postdoc Changhun Oh, has contributed to this field through a series of related studies since 2021. Their research has examined the computational capabilities of noisy intermediate-scale quantum (NISQ) devices and shown how photon loss affects how efficiently those devices can be simulated classically.

Along the way, they have developed classical algorithms for graph-theoretic problems and quantum chemistry problems, showing that classical methods might perform comparably to certain quantum setups. Together, these studies add to a growing body of work that challenges simple assumptions about whether quantum or classical approaches come out ahead.

The classical simulation algorithm is a significant step forward in understanding Gaussian boson sampling and in making the complexities of quantum computing more tractable. The research lays groundwork for future breakthroughs and practical applications, and it shows that the pursuit of quantum advantage is not only an experimental race but also a driver of technologies needed to solve hard problems across many sectors. As researchers continue to probe the interplay between quantum and classical computing, they are building a bridge that could redefine the limits of computational science.