In the ever-evolving world of artificial intelligence, large language models (LLMs), which can generate human-like text and answer complex queries, have reshaped how we interact with technology. However, as these models grow more complex, so does the challenge of ensuring that their outputs are both accurate and contextually relevant. A significant step forward is Co-LLM, an algorithm recently developed by researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL). The approach improves the efficiency and accuracy of LLMs by having models collaborate, and it mirrors a human-like decision: recognizing when expert input is needed.

Imagine being asked a question in your area of expertise when you only have part of the answer. Rather than stumbling through it, the sensible move is to consult a more knowledgeable peer. LLMs face a similar dilemma: despite their capabilities, they are often uncertain about specific parts of complex queries. Earlier approaches gave a model little ability to assess its own limits and recognize when to seek help from a more specialized model. That limitation can produce inaccurate or incomplete responses, especially in demanding domains such as medicine and mathematics. With Co-LLM, the researchers aim to teach an LLM to recognize when additional expertise is warranted.

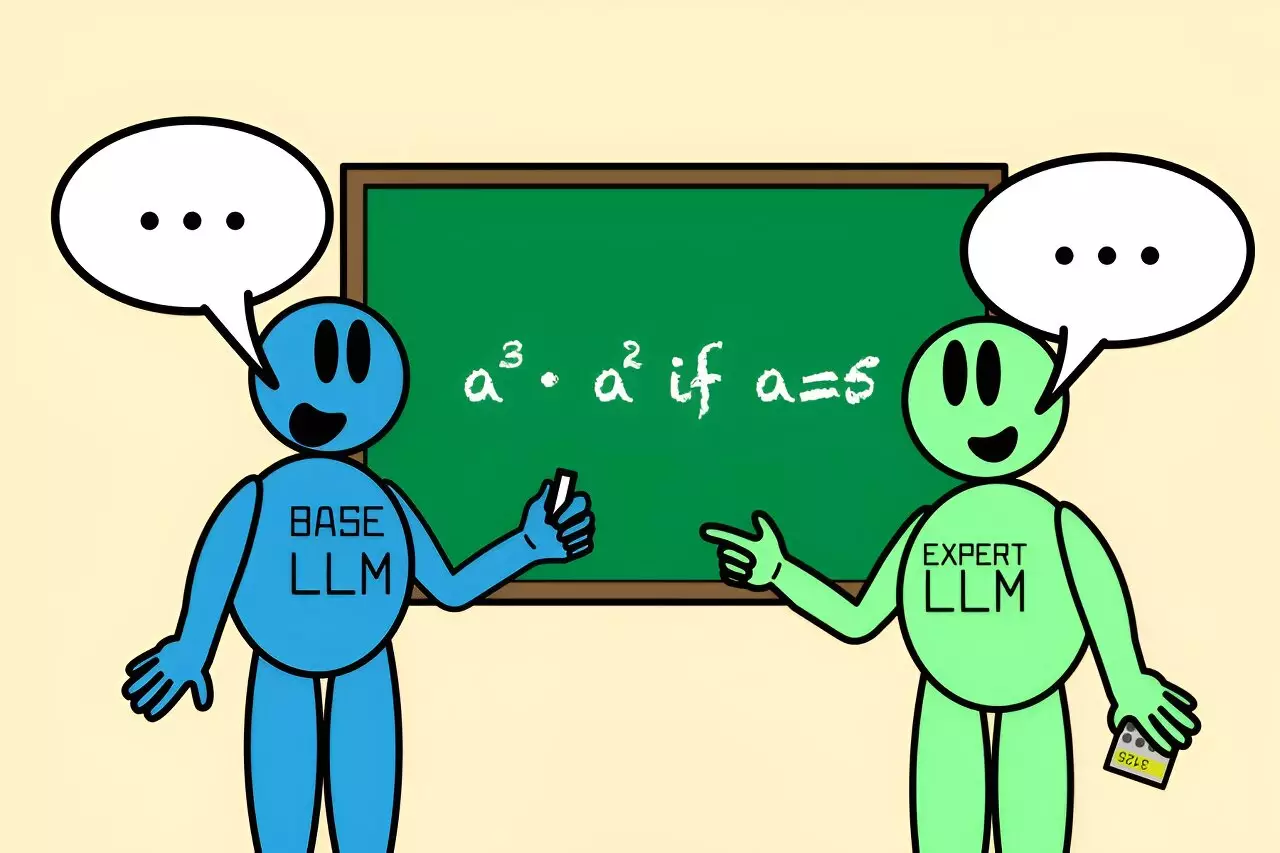

At its core, Co-LLM links a general-purpose LLM with a model specialized for a particular domain. As the general (base) model generates a response, Co-LLM examines it token by token (a token is a word or word fragment), deciding at each step whether the base model should produce the next token itself. Where the base model’s expertise falls short, it effectively ‘phones a friend’ and hands that token to the specialized model. The result is more dependable responses, particularly in fields that demand high precision, such as medical diagnoses and mathematical problem-solving.
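To make the token-level hand-off concrete, here is a minimal Python sketch. It is not the CSAIL implementation: the names `co_decode`, `base`, `expert` and `defer` are hypothetical, the two “models” are stand-in functions that return a next token for a given prefix, and the switch is a plain callable. At every step the loop asks the switch which model should produce the next token and records who produced each one.

```python
from typing import Callable, List, Tuple

Token = str
# A "model" here is any function mapping the tokens so far to the next token.
NextToken = Callable[[List[Token]], Token]
# Hypothetical switch: returns True when the expert should produce the next token.
Switch = Callable[[List[Token]], bool]


def co_decode(prompt: List[Token], base: NextToken, expert: NextToken,
              defer: Switch, max_tokens: int = 10,
              eos: Token = "<eos>") -> Tuple[List[Token], List[str]]:
    """Generate token by token, letting the switch pick the model at each step."""
    tokens = list(prompt)
    sources: List[str] = []  # which model produced each generated token
    for _ in range(max_tokens):
        if defer(tokens):
            nxt, who = expert(tokens), "expert"
        else:
            nxt, who = base(tokens), "base"
        if nxt == eos:  # stop as soon as either model ends the sequence
            break
        tokens.append(nxt)
        sources.append(who)
    return tokens[len(prompt):], sources


# Toy stand-ins: the base model gets the answer wrong, the expert gets it right.
def toy_base(prefix: List[Token]) -> Token:
    return "5" if prefix[-1] == "=" else "<eos>"


def toy_expert(prefix: List[Token]) -> Token:
    return "4" if prefix[-1] == "=" else "<eos>"


def toy_defer(prefix: List[Token]) -> bool:
    return prefix[-1] == "="  # hand off only at the answer position


output, sources = co_decode(["2", "+", "2", "="], toy_base, toy_expert, toy_defer)
print(output, sources)  # ['4'] ['expert']
```

In a real system `base` and `expert` would be language models and `defer` would be a learned component rather than a hand-written rule; the sketch only shows the shape of the control flow.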

The key to Co-LLM is a mechanism called the “switch variable.” Acting like a project manager, it judges, for each part of the base model’s response, whether the base model is competent to produce it or whether the specialized model should step in. This combines general knowledge with expert insight, and because the expert model is engaged only when necessary, the collaboration improves response quality while conserving computational resources.
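One way to picture the switch variable is as a small gate sitting on top of the base model. The sketch below assumes one plausible parameterization: a logistic gate over the base model’s hidden state at each token, with a fixed threshold deciding when to call the expert. The function names, the 2-D “hidden states,” and the weights are made-up illustrations, not code or values from the researchers. The sketch also counts expert calls, which is where the compute savings come from.

```python
import math
from typing import List, Sequence


def switch_prob(hidden: Sequence[float], weights: Sequence[float], bias: float) -> float:
    """Hypothetical gate: sigmoid of a linear score on the base model's
    hidden state, read as P(defer to the expert) for the next token."""
    score = sum(h * w for h, w in zip(hidden, weights)) + bias
    return 1.0 / (1.0 + math.exp(-score))


def deferral_plan(hidden_states: List[Sequence[float]], weights: Sequence[float],
                  bias: float, threshold: float = 0.5) -> List[bool]:
    """Return one decision per token position: True means call the expert."""
    return [switch_prob(h, weights, bias) > threshold for h in hidden_states]


# Toy 2-D "hidden states" for four token positions (made-up numbers).
states = [[0.1, -1.0], [2.0, 0.5], [-0.5, -0.2], [3.0, 1.0]]
w, b = [1.5, 1.0], -1.0

plan = deferral_plan(states, w, b)
expert_calls = sum(plan)
print(plan, f"expert called on {expert_calls}/{len(plan)} tokens")
# [False, True, False, True] expert called on 2/4 tokens

# A stricter threshold sends fewer tokens to the expert.
strict_plan = deferral_plan(states, w, b, threshold=0.95)
print(strict_plan)  # [False, False, False, True]
```

Raising the threshold lowers cost by sending fewer tokens to the expert, at the risk of keeping more of the base model’s weaker guesses. In practice the gate’s weights would be learned from data rather than set by hand.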

Co-LLM’s practical advantages become clear when its output is compared with that of conventional models. Consider a hypothetical case in which a general LLM is asked to describe the composition of a prescription drug: lacking specialized knowledge, it may introduce errors. With Co-LLM, the general model instead defers to a specialized biomedical model for the parts it is unsure about, producing a more precise and reliable answer.

Co-LLM’s benefits also extend to math. In one problem, a general-purpose model working alone arrived at 125, but when paired with an expert math model, the collaboration produced the correct answer of 3,125. The gain is not marginal: Co-LLM markedly outperforms models that work in isolation, whether they lack specialization or fine-tuning.
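The article does not state the problem itself. As a purely hypothetical illustration of how both numbers can come out of a single question, take the expression $a^3 \cdot a^2$ at $a = 5$: a model that effectively evaluates only $a^3$ lands on 125, while applying the exponent rule correctly gives 3,125.

$$
a^3 \cdot a^2 = a^{3+2} = a^5, \qquad 5^5 = 3125, \qquad 5^3 = 125 .
$$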

Co-LLM also shows promise for continued improvement. The researchers are considering enhancements that would let the model incorporate feedback and corrections more proactively: if the expert model provides inaccurate information, a future version of Co-LLM could course-correct and still produce a satisfactory output.

In addition, the specialized models could be updated as new information becomes available, keeping the system current, much like maintaining a living knowledge base. This ability to refresh its expertise could benefit not only individual queries but also larger enterprise applications, making Co-LLM a versatile tool for working with complex datasets or technical documents.

The Co-LLM framework represents a significant advance in LLM technology and underscores the value of collaboration in AI systems. By emulating a human-like decision about when to seek help, Co-LLM coordinates general and specialized models to produce more accurate and efficient output. As the field progresses, we can envision AI systems that collaborate more adeptly, drawing on a diverse range of expertise to deliver comprehensive solutions. This marks an important step toward AI that can accurately interpret and respond to the complexities of human inquiry.