In recent years, deep learning models have transformed how data is analyzed and predictions are made in sectors such as healthcare, finance, and scientific research. Large models such as GPT-4 require immense computational power, which is usually supplied by cloud-based servers. This dependence on cloud infrastructure raises serious security concerns, particularly in high-stakes settings like healthcare, where patient privacy is paramount. To address them, researchers at MIT have developed a quantum-based security protocol tailored to deep learning applications.

As deep learning spreads, so do its risks. Sending sensitive data to a remote server for processing can expose that data to breaches. Healthcare organizations, for instance, are understandably cautious about AI tools that process confidential patient records. This fear of unintentional exposure can stifle innovation and keep beneficial technologies out of the sectors that need them most. What is needed are security protocols that protect sensitive data without limiting what deep learning models can do.

The MIT team builds its protocol on quantum mechanics. By encoding information in laser light, the protocol is designed to keep data exchanged between clients and servers secure: an attempt to copy that information disturbs the light in a way that can be detected. At the heart of this approach is the no-cloning theorem of quantum mechanics, which states that an unknown quantum state cannot be perfectly copied. This principle is what allows the protocol to protect both the client's data (such as patient records) and the proprietary deep learning model on the server.
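The no-cloning principle can be derived in a few lines. The standard textbook argument below is included for illustration and is not taken from the MIT work. Suppose a single unitary operation $U$ could copy any state onto a blank register $|0\rangle$. Applying it to two states $|\psi\rangle$ and $|\phi\rangle$ and comparing inner products before and after copying gives:

$$
\begin{aligned}
U\big(|\psi\rangle \otimes |0\rangle\big) &= |\psi\rangle \otimes |\psi\rangle,
\qquad
U\big(|\phi\rangle \otimes |0\rangle\big) = |\phi\rangle \otimes |\phi\rangle, \\
\langle\psi|\phi\rangle\,\langle 0|0\rangle
&= \big(\langle\psi| \otimes \langle 0|\big)\, U^\dagger U \,\big(|\phi\rangle \otimes |0\rangle\big)
= \langle\psi|\phi\rangle^{2}, \\
\langle\psi|\phi\rangle &= \langle\psi|\phi\rangle^{2}
\;\Longrightarrow\; \langle\psi|\phi\rangle \in \{0,\,1\}.
\end{aligned}
$$

Because a unitary preserves inner products and $\langle 0|0\rangle = 1$, the overlap of the two inputs must equal the overlap of the two copies. The equation $x = x^2$ holds only for $x = 0$ or $x = 1$, so such a copier can work only on states that are identical or orthogonal. No device can faithfully copy an unknown state drawn from a set of non-orthogonal states.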

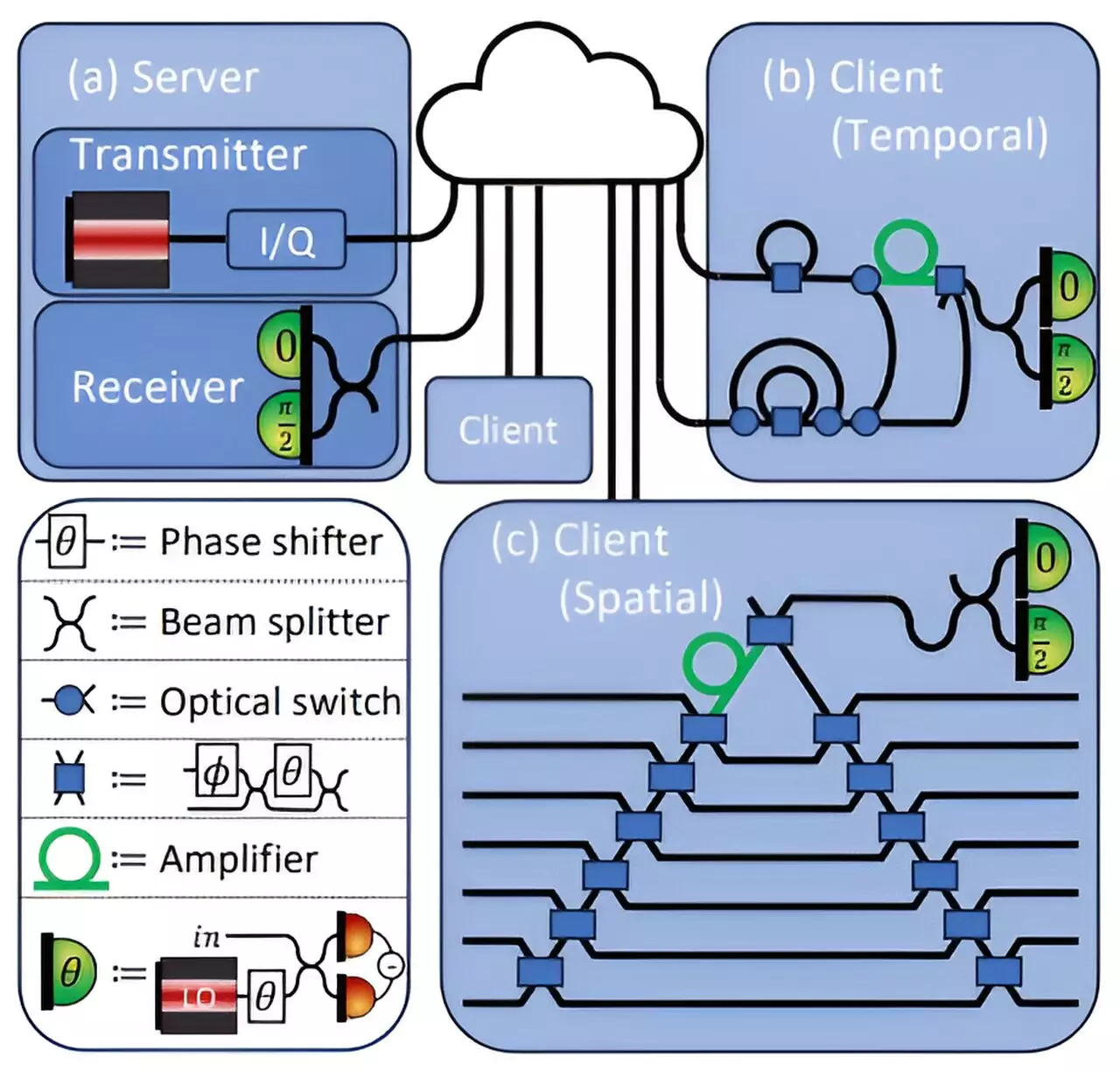

In practice, the protocol involves two parties. A client holding confidential data, such as medical images, wants to use a deep learning model hosted on a central server to obtain predictions, for example whether an image shows signs of cancer, without revealing that data. The server, for its part, wants to keep its model, in particular the trained weights, confidential.

The protocol works as follows. The server encodes its model's weights into an optical field using laser light and sends it to the client. The client measures only the part of the light it needs for its computation, which limits how much it can learn about the server's weights. Measuring the light unavoidably disturbs it slightly, so the residual light the client returns lets the server check for signs that the weights have leaked. At the same time, the returned light reveals nothing about the client's data.
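To make the measure-and-return bookkeeping concrete, here is a minimal classical sketch in Python. It is a toy under stated assumptions, not the MIT implementation. Each weight is treated as the amplitude of one optical mode. The client may tap a hypothetical fraction `AGREED_TAP` of the optical power to compute one layer, with Gaussian noise standing in for measurement noise. The server flags a problem if the returned light carries less power than the agreed tap explains. All function names and parameters are hypothetical. A classical model cannot reproduce the no-cloning guarantee itself, since classical light could simply be copied; it only illustrates how returning the residual light lets the server audit what the client took.

```python
import numpy as np

# Toy classical model of the measure-and-return idea (hypothetical, not the MIT code).
AGREED_TAP = 0.1  # hypothetical fraction of optical power the client may measure


def encode_weights(weights):
    """Hypothetical encoding: each weight becomes the amplitude of one optical mode."""
    return np.asarray(weights, dtype=float)


def client_forward(field, x, tap, rng, noise=0.01):
    """Compute one layer from the tapped light; return (activation, residual field)."""
    tapped = np.sqrt(tap) * field          # light the client measures
    residual = np.sqrt(1.0 - tap) * field  # light sent back to the server
    # Rescale to estimate W @ x; Gaussian noise stands in for measurement noise.
    pre_activation = (tapped @ x) / np.sqrt(tap)
    pre_activation += rng.normal(0.0, noise, size=pre_activation.shape)
    return np.maximum(pre_activation, 0.0), residual  # ReLU output feeds the next layer


def server_check(sent, residual, agreed_tap=AGREED_TAP, tol=0.02):
    """Return True if the returned power matches what the agreed tap leaves behind."""
    expected_power = (1.0 - agreed_tap) * np.sum(sent ** 2)
    returned_power = np.sum(residual ** 2)
    return bool(returned_power >= (1.0 - tol) * expected_power)


rng = np.random.default_rng(0)
W1 = encode_weights([[0.5, -0.2], [0.3, 0.8]])  # hypothetical layer weights
x = np.array([1.0, 2.0])                        # hypothetical client input

h, residual = client_forward(W1, x, tap=AGREED_TAP, rng=rng)
print(server_check(W1, residual))         # True: client took only the agreed share

_, greedy_residual = client_forward(W1, x, tap=0.5, rng=rng)
print(server_check(W1, greedy_residual))  # False: too much light was taken
```

A client that taps more light than agreed, for example to learn more about the weights, returns less power and fails the check. In the real protocol the guarantee comes from quantum measurement disturbance rather than from the simple power accounting used here.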

In tests, the researchers showed that a deep learning model protected by the protocol could still reach 96% accuracy. This indicates that strong security can be achieved while preserving model performance, and real-world applications need both.

The protocol also provides two-way security. The client's data stays confidential, and at the same time the server's model, its intellectual property, is protected. This mutual protection is vital for building trust between clients and cloud-based service providers.

Looking ahead, the researchers see applications beyond the single-client, single-server scenario described here. One direction is federated learning, in which multiple parties contribute their own data to train a shared central model without exposing their individual datasets. The protocol could also be combined with quantum operations, which might improve both the accuracy and the security of the computation.
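For readers unfamiliar with federated learning, the sketch below shows the widely used federated averaging (FedAvg) scheme on a toy linear-regression problem. It is purely classical and is not the researchers' quantum extension, which the article describes only as future work. The party sizes, learning rate, and round counts are arbitrary illustrative choices. Each party runs a few gradient steps on its own data, and only the resulting weights leave the party. The server then averages them in proportion to each party's dataset size.

```python
import numpy as np


def local_update(w, X, y, lr=0.1, epochs=5):
    """Run a few gradient-descent steps on one party's private least-squares data."""
    w = w.copy()
    for _ in range(epochs):
        grad = 2.0 * X.T @ (X @ w - y) / len(y)  # gradient of the mean squared error
        w -= lr * grad
    return w


def federated_average(w, parties, rounds=100):
    """FedAvg: parties train locally; the server only sees and averages their weights."""
    sizes = np.array([len(y) for _, y in parties], dtype=float)
    for _ in range(rounds):
        updates = [local_update(w, X, y) for X, y in parties]
        w = np.average(updates, axis=0, weights=sizes)  # size-weighted average
    return w


# Illustrative data: three parties, each holding its own private (X, y).
rng = np.random.default_rng(0)
true_w = np.array([2.0, -1.0])
parties = []
for n in (20, 30, 50):
    X = rng.normal(size=(n, 2))
    parties.append((X, X @ true_w))

w = federated_average(np.zeros(2), parties)
print(np.round(w, 3))
```

Because every party's data here is noiseless and generated from the same weights, the averaged model recovers `true_w` even though no party ever shares its raw data.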

Research director Eleni Diamanti notes that combining two disciplines, quantum key distribution and deep learning, creates a new paradigm for privacy in data architectures. Such advances could reshape how sensitive industries like healthcare use AI while upholding the highest standards of data protection.

Quantum security protocols for deep learning mark a significant step forward in safeguarding sensitive information. By building quantum mechanics into how data is transmitted, the MIT researchers offer a compelling answer to growing concerns about data privacy in cloud-based computation. As industries adopt these technologies, the intersection of quantum physics and artificial intelligence could make data analysis both secure and efficient, building confidence in deep learning across vital sectors.