As artificial intelligence continues its rapid ascent, the infrastructure supporting it faces mounting pressure. Data centers, the backbone of modern digital life, are growing in both size and energy consumption. Current cooling methods, mainly fans and liquid coolants, are struggling to keep up, driving up energy costs and raising environmental concerns. As AI-powered applications become ubiquitous, the energy demands of data centers will keep climbing steeply unless more efficient approaches are adopted. The situation calls for cooling technologies that can keep pace with AI’s expansion without draining the planet’s resources.

Innovative Approaches: The Promise of Two-Phase Cooling

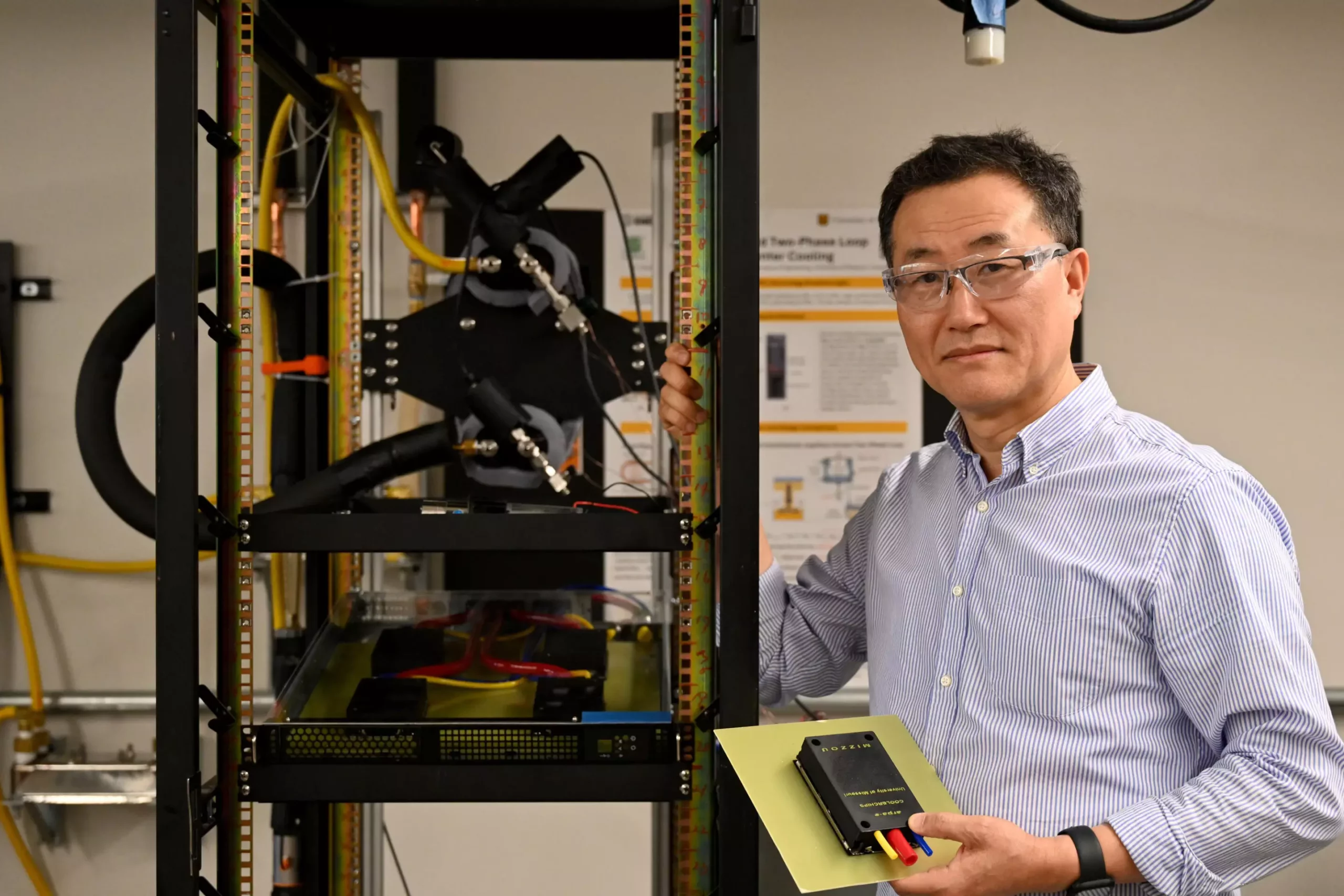

Enter Professor Chanwoo Park and his team at the University of Missouri. They are developing an advanced two-phase cooling system that uses phase change, much like water boiling into vapor, to carry heat away more efficiently. Unlike traditional methods, this system doesn’t rely solely on energy-consuming fans or pumps. Instead, it passively exploits the physics of boiling on a finely engineered porous surface, which sharply reduces wasted energy. When additional cooling is necessary, a small mechanical pump is activated, drawing far less energy than conventional cooling solutions.
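To see why phase change moves heat so effectively, it helps to compare the energy a liquid absorbs when it merely warms up with the energy it absorbs when it boils. The short Python sketch below uses textbook properties of water purely as an illustration. The working fluid in Park’s system is not specified here and may differ, and the function names are invented for this example.

```python
# Textbook properties of water at atmospheric pressure (illustrative only;
# the actual coolant in the system described above may be a different fluid).
SPECIFIC_HEAT_J_PER_KG_K = 4186.0   # energy to warm 1 kg of liquid water by 1 K
LATENT_HEAT_J_PER_KG = 2.257e6      # energy to vaporize 1 kg of water at 100 °C


def sensible_heat_j(mass_kg: float, delta_t_k: float) -> float:
    """Heat absorbed by warming the liquid without boiling it."""
    return mass_kg * SPECIFIC_HEAT_J_PER_KG_K * delta_t_k


def latent_heat_j(mass_kg: float) -> float:
    """Heat absorbed by turning the liquid into vapor at constant temperature."""
    return mass_kg * LATENT_HEAT_J_PER_KG


mass = 0.001  # one gram of water
warming = sensible_heat_j(mass, delta_t_k=10.0)  # warm the gram by 10 K
boiling = latent_heat_j(mass)                    # boil the same gram
ratio = boiling / warming

print(f"Warming 1 g by 10 K absorbs {warming:.1f} J")
print(f"Boiling 1 g absorbs {boiling:.0f} J")
print(f"Boiling absorbs about {ratio:.0f} times more heat")
```

For water, boiling a gram absorbs roughly 54 times as much heat as warming it by 10 K, which is the basic reason two-phase systems can remove a lot of heat with little fluid movement.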

The core innovation lies in the system’s ability to adapt dynamically to varying heat loads. During low-demand periods, it operates passively without any energy input; when heat increases, the pump kicks in just enough to handle the excess. This hybrid approach could serve as a scalable blueprint for future data centers, reducing environmental impact and substantially cutting cooling costs.
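The hybrid behavior described above can be pictured as a simple rule: no pump power below some passive capacity, and pump power that scales with the excess heat above it. The Python sketch below is only a toy model of that idea. The class name, the 300 W passive capacity, and the pump coefficient are all hypothetical values chosen for illustration, not figures from Park’s team, and the real controller may work quite differently.

```python
from dataclasses import dataclass


@dataclass
class HybridCooler:
    # Hypothetical parameters, chosen only to make the example concrete.
    passive_capacity_w: float = 300.0   # heat the passive boiling surface handles alone
    pump_w_per_excess_w: float = 0.02   # pump power drawn per watt of excess heat

    def pump_power(self, heat_load_w: float) -> float:
        """Return the pump power (W) this toy model assigns to a heat load (W)."""
        # Below the passive capacity the excess is zero, so the pump stays off.
        excess = max(0.0, heat_load_w - self.passive_capacity_w)
        return excess * self.pump_w_per_excess_w


cooler = HybridCooler()
for load in (100.0, 300.0, 500.0):
    print(f"{load:.0f} W load -> pump draws {cooler.pump_power(load):.1f} W")
```

With these made-up numbers the pump stays off at 100 W and 300 W and draws only a few watts at 500 W, which mirrors the "passive first, pump only for the excess" behavior the article describes.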

Potential Impact on Sustainable Tech and Future AI Infrastructure

What makes this development compelling is its scalability and compatibility with existing data center architecture. Designed for easy integration into server racks, the system could be adopted widely across the industry and help shift it toward greener computing. Data centers currently account for over 4% of U.S. electricity consumption, and about 40% of that goes to cooling, which puts cooling alone at roughly 1.6% of national electricity use. Cutting into that share could meaningfully reduce the sector’s carbon footprint.

Beyond energy savings, this approach points to a future where advanced thermal management is a standard feature, not an afterthought. The timing is apt: as AI works its way into more of daily life, the supply of efficient, sustainable infrastructure has to keep pace. Park’s work is not merely a technical advance but a step toward aligning technological progress with environmental stewardship.

Furthermore, initiatives like the Center for Energy Innovation demonstrate that collaborative interdisciplinary research is essential for tackling these complex challenges. By integrating insights from thermal engineering, energy science, and AI technology, we can accelerate the development of holistic solutions that address both performance and sustainability.

Why This Innovation Matters Now More Than Ever

The need to rethink cooling technologies is urgent. Traditional methods are hitting a ceiling in efficiency and scalability, and that ceiling threatens to bottleneck AI’s growth. If data centers become prohibitively expensive or environmentally damaging to cool, the growth and accessibility of AI could be hampered. Technology should progress in harmony with ecological health, not at odds with it.

Park’s approach shows how targeted engineering solutions, grounded in scientific principles, can challenge entrenched industry standards. The passive component of the system is designed to save energy without sacrificing performance, which makes it a forward-looking design that anticipates future demands. As AI systems grow in complexity and size, the industry will need smarter, cleaner cooling solutions that can be scaled globally, and this work points in that direction.

In essence, this work reflects a broader shift: a recognition that sustainable growth in technology depends heavily on energy-efficient infrastructure. The future of AI hinges not just on algorithms and hardware, but on the environmental accountability of the entire ecosystem supporting it. Advances like Professor Park’s cooling system could be pivotal in laying that sustainable foundation, so that our digital society doesn’t leave a trail of excessive energy consumption in its wake.