The evolution of artificial intelligence has brought us into the era of Large Language Models (LLMs). Tools such as ChatGPT have become part of many people's daily routines, helping with tasks that range from simple queries to complex decision-making. An article published in *Nature Human Behaviour* by an interdisciplinary team led by researchers from Copenhagen Business School and the Max Planck Institute for Human Development examines both the transformative potential and the inherent risks of LLMs for collective intelligence: the ability of groups to pool knowledge and work together toward shared goals.

Humans are inherently social creatures who thrive on collaboration. Collective intelligence captures the idea that a group, by sharing knowledge and skills, can outperform its individual members. The phenomenon appears in many settings, from small workplaces where diverse ideas fuel innovation to large platforms like Wikipedia that draw on contributions from millions of people. By bringing together many perspectives, collective intelligence can produce richer, more nuanced solutions to complex problems. Seen in this light, the arrival of LLMs has significant implications for how we, as a society, find information and make decisions.

One striking advantage of LLMs is their ability to democratize access to information. Their translation capabilities can help people communicate across language barriers, and their drafting and editing assistance can improve written expression. These functions make it easier for people from varied educational and cultural backgrounds to take part meaningfully in discussions that demand nuanced understanding. LLMs can also act as catalysts for idea generation: by summarizing different viewpoints and surfacing relevant information, they can fill knowledge gaps and support more informed consensus-building.

As Ralph Hertwig, a co-author of the article, suggests, integrating these models into collective decision-making could make such processes more efficient. Because LLMs can handle vast amounts of information in real time, they may speed up discussions and encourage more diverse contributions, enriching the dialogue. This potential, however, must be weighed against the accompanying risks.

Identifying Risks of LLM Utilization

While the advantages of LLMs are evident, their use also has drawbacks. One significant concern is that LLMs may erode people's intrinsic motivation to contribute to shared knowledge repositories such as Wikipedia or Stack Overflow. Because LLMs make content so easy to generate, they may discourage personal engagement and reduce the diversity of input that a comprehensive knowledge base depends on. If users become overly reliant on LLMs, the range of information and perspectives available in digital spaces risks becoming homogenized.

Furthermore, “false consensus” emerges as a critical challenge: the mistaken belief that one's views are more widely shared than they actually are. Because LLMs reflect aggregated online data, they tend to amplify majority views. As Jason Burton, lead author of the study, points out, this tendency could inadvertently sideline minority opinions. Public discourse could suffer as a result, with marginalized viewpoints struggling for visibility against a backdrop of apparent agreement.

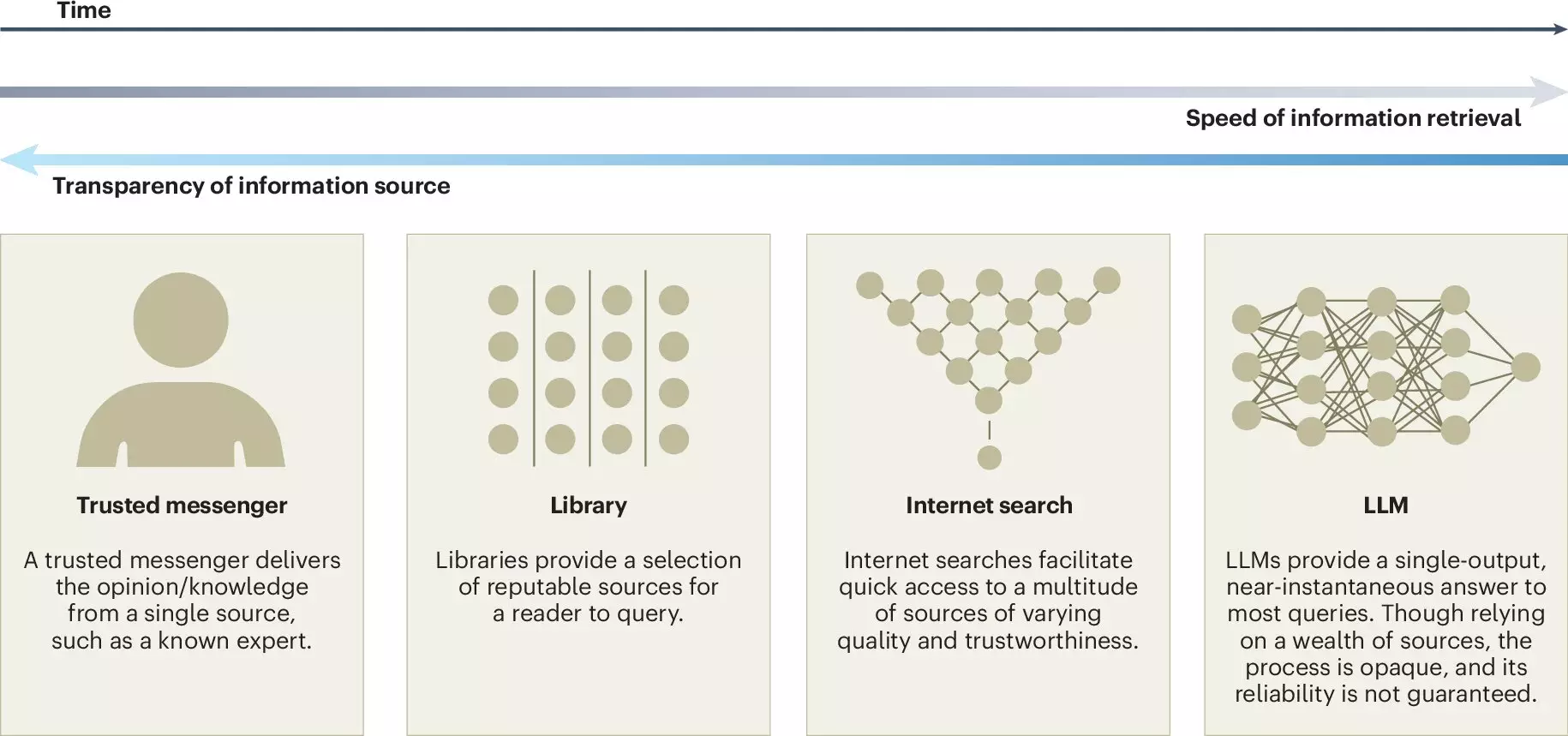

Given these complexities, the authors of the article advocate a proactive approach to LLM development. Transparency about the sources of training data and the methods used to build these models is paramount. External audits and regular monitoring are essential for assessing and mitigating the effects of deploying LLMs in different contexts. Clear guidelines for credit and accountability are also needed, especially where LLMs co-create knowledge alongside human contributors.

The article also emphasizes the importance of human involvement in developing LLMs, with particular attention to ensuring diverse representation of knowledge. By working through these issues, researchers and developers can better understand the role LLMs will play in shaping the future of collective intelligence.

The research presented in *Nature Human Behaviour* sheds light on the double-edged nature of LLMs: they hold the promise of enhancing collective intelligence, but they also introduce substantial risks that must not be overlooked. As these technologies become more deeply integrated into our collective processes, we must remain vigilant. Striking the right balance will require ongoing dialogue, careful attention to ethics, and a commitment to fostering an inclusive knowledge landscape that truly empowers all voices. Now is the time to think critically about how we can shape the role of LLMs for a more collaborative and equitable future.