In recent years, the field of speech emotion recognition (SER) has made remarkable strides, owing largely to the implementation of deep learning technologies. These advancements have unlocked new possibilities across various sectors, including mental health, customer service, and interactive AI. However, with these transformative capabilities come significant risks. Researchers have increasingly focused on understanding how susceptible these models are to adversarial attacks, which can compromise their efficacy and reliability.

Adversarial attacks manipulate input data to deceive machine learning models into making incorrect predictions. They fall broadly into two types: white-box and black-box attacks. White-box attacks give the adversary full access to the model’s architecture and parameters, while black-box attacks operate with limited knowledge, relying solely on the model’s output to generate adversarial examples. A recent study by a team at the University of Milan highlights how vulnerable convolutional neural network long short-term memory (CNN-LSTM) models are when used for SER.

The researchers published their findings in the journal *Intelligent Computing*, reporting that a range of adversarial attacks significantly degraded SER performance. Their study covered three datasets, EmoDB for German, EMOVO for Italian, and RAVDESS for English, and used both white-box methods, such as the Fast Gradient Sign Method, and black-box methods, such as the Boundary Attack.
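To make the white-box setting concrete, here is a minimal sketch of a single Fast Gradient Sign Method step. It is not the study's implementation: the CNN-LSTM is replaced by a toy logistic-regression classifier so that the gradient can be written by hand, and the weights, input, and `epsilon` are illustrative assumptions. The attacker uses the model's parameters to compute the loss gradient with respect to the input, then nudges every feature by `epsilon` in the direction that increases the loss.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(weights, bias, x):
    """Probability of class 1 under a toy logistic-regression model."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return sigmoid(z)

def fgsm(weights, bias, x, y, epsilon):
    """One FGSM step: x_adv = x + epsilon * sign(dLoss/dx).

    For binary cross-entropy on a logistic model, dLoss/dx = (p - y) * w,
    which the white-box attacker can compute because the weights are known.
    """
    p = predict_proba(weights, bias, x)
    grad = [(p - y) * w for w in weights]
    sign = [(g > 0) - (g < 0) for g in grad]  # -1, 0, or +1 per feature
    return [xi + epsilon * s for xi, s in zip(x, sign)]

# Hypothetical model and input (illustrative values, not from the study).
weights = [2.0, -1.0, 0.5]
bias = 0.0
x = [0.3, 0.1, 0.2]  # classified as class 1 before the attack
y = 1                # true label

x_adv = fgsm(weights, bias, x, y, epsilon=0.25)
print(predict_proba(weights, bias, x) > 0.5)      # original prediction is class 1
print(predict_proba(weights, bias, x_adv) > 0.5)  # prediction after the attack
```

In a real SER pipeline the same step would be applied to the spectrogram input, with the gradient obtained by automatic differentiation rather than a closed-form expression.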

Remarkably, even though white-box attackers have complete access to the model’s details, white-box attacks did not consistently outperform black-box techniques. This finding is particularly concerning because it suggests that adversaries can craft effective perturbations without any detailed knowledge of the model’s inner workings. Less technically proficient attackers could therefore disrupt a system merely by observing its outputs.
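The following sketch illustrates how an attacker can work from output labels alone. It is a simplified random-walk search in the spirit of decision-based attacks such as the Boundary Attack, not the published algorithm and not the study's code. The classifier `predict_label`, the starting point, and the step settings are all hypothetical. The search starts from an input that is already misclassified and keeps any random proposal that stays misclassified while moving closer to the original input.

```python
import random

def predict_label(x):
    """Stand-in for a deployed classifier: the attacker sees only this label."""
    score = 2.0 * x[0] - 1.0 * x[1] + 0.5 * x[2]
    return 1 if score > 0 else 0

def distance(a, b):
    """Euclidean distance between two feature vectors."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b)) ** 0.5

def decision_based_attack(x, x_start, steps=500, step_size=0.05, seed=0):
    """Shrink a misclassified point toward x using label queries only."""
    rng = random.Random(seed)
    original_label = predict_label(x)
    if predict_label(x_start) == original_label:
        raise ValueError("x_start must already be misclassified")
    best = list(x_start)
    for _ in range(steps):
        # Proposal: small random jitter plus a small move toward the original.
        candidate = [
            b + rng.gauss(0.0, step_size) + step_size * (xi - b)
            for b, xi in zip(best, x)
        ]
        # Keep it only if it is still misclassified and closer to the original.
        if (predict_label(candidate) != original_label
                and distance(candidate, x) < distance(best, x)):
            best = candidate
    return best

x = [0.3, 0.1, 0.2]         # original input, label 1
x_start = [-1.0, 1.0, 0.0]  # hypothetical starting point, label 0
x_adv = decision_based_attack(x, x_start)
print(predict_label(x), predict_label(x_adv))
print(distance(x_start, x), distance(x_adv, x))
```

No gradient or weight appears anywhere in the attack loop; the only interaction with the model is the call to `predict_label`, which is what makes the setting black-box.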

Gender and Language Considerations

The research also examined how adversarial attacks affect different genders and languages. Variance across languages was small overall, but English models tended to be the most vulnerable, while Italian models were relatively more resilient. In gender-specific evaluations, male speech samples showed slightly higher accuracy, although the differences were marginal. The near-uniform impact across genders and languages points to a broader pattern: the SER models tested were vulnerable regardless of their specific attributes.

Methodological Rigor and Consistency

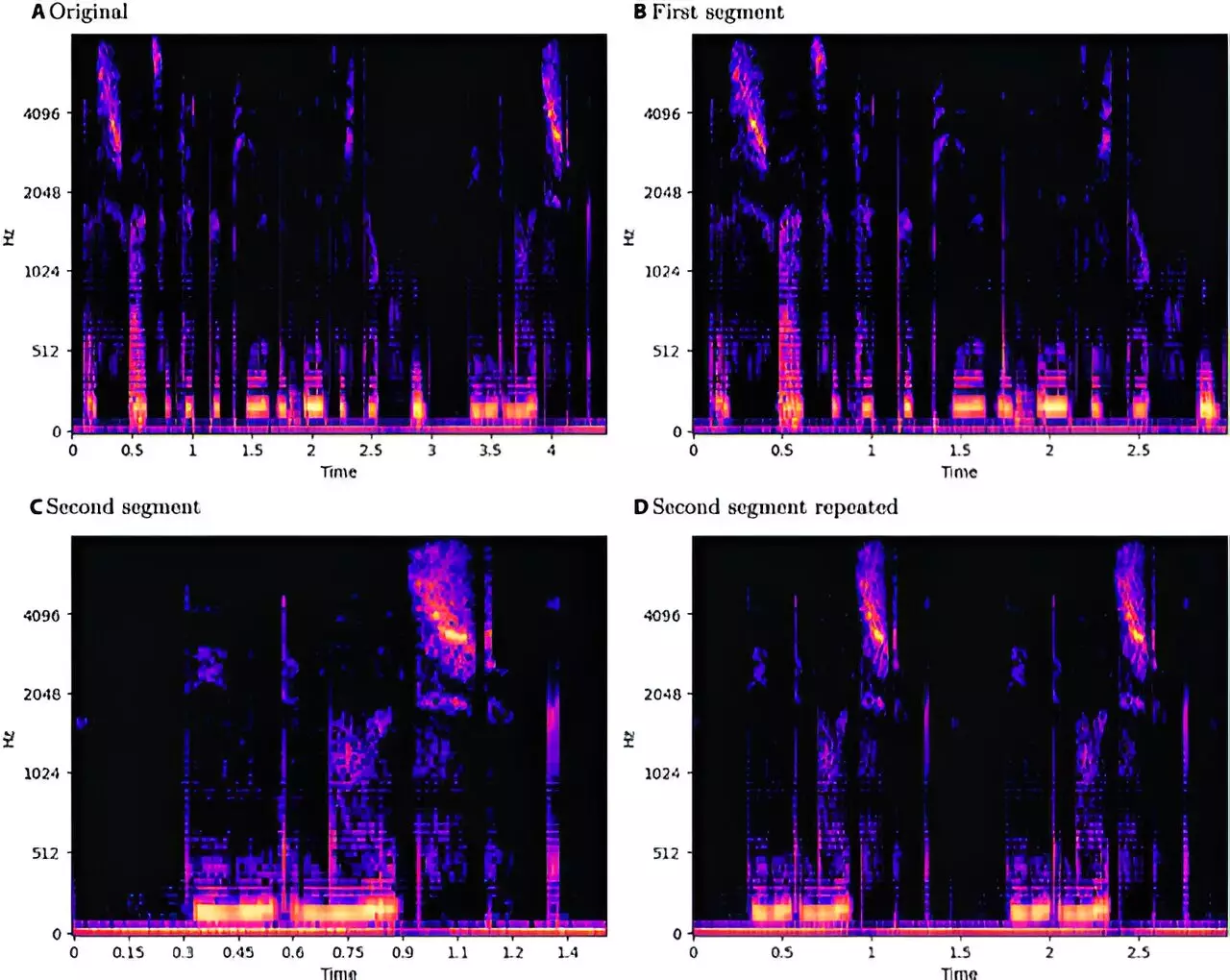

To ensure consistency in their analysis, the research team developed a systematic approach to data processing and feature extraction. They used log-Mel spectrograms as input features, augmented their datasets with techniques such as pitch shifting and time stretching, and standardized all samples to a three-second duration. This methodology makes the findings easier to reproduce and helps clarify how different factors affect model performance under adversarial conditions.
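As an illustration of the duration standardization step, the sketch below forces a waveform to exactly three seconds. The article does not say how the team handled clips of other lengths, so truncating long clips and zero-padding short ones at the end is an assumption here, as are the function name `fix_duration` and the 16 kHz sample rate. Spectrogram extraction and augmentation are omitted.

```python
def fix_duration(samples, sample_rate, seconds=3.0):
    """Return a copy of `samples` that is exactly `seconds` long.

    Longer clips are truncated; shorter clips are zero-padded at the end.
    (Assumed strategy; the source does not specify one.)
    """
    target = int(round(sample_rate * seconds))
    if len(samples) >= target:
        return list(samples[:target])
    return list(samples) + [0.0] * (target - len(samples))

SAMPLE_RATE = 16000  # hypothetical sample rate in Hz

short_clip = [0.1] * (1 * SAMPLE_RATE)  # 1 second of audio
long_clip = [0.1] * (5 * SAMPLE_RATE)   # 5 seconds of audio

print(len(fix_duration(short_clip, SAMPLE_RATE)))  # number of samples after padding
print(len(fix_duration(long_clip, SAMPLE_RATE)))   # number of samples after truncation
```

Fixing the length before feature extraction means every log-Mel spectrogram has the same number of time frames, so the model always receives inputs of one shape.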

Contrary to the notion that revealing vulnerabilities could arm potential adversaries, the authors of the study advocate for transparency in the research community. By openly discussing weaknesses, both defenders and attackers can gain a clearer understanding of the vulnerabilities inherent in SER systems. This collective knowledge is vital for developing more robust defenses. Rather than shielding information that could be exploited, sharing insights allows for proactive measures to strengthen SER technologies, ultimately fostering a more secure technological environment.

As SER continues to evolve, researchers, developers, and practitioners need to remain aware of the risks associated with deep learning models. The findings from the University of Milan are a call for the community not only to recognize these vulnerabilities but also to actively seek ways to mitigate the threat posed by adversarial attacks. By prioritizing transparency and collaborative work on defenses, the community can realize the potential of SER safely and securely, in a way that respects both user experience and technological integrity.